LeCun et al. - Convolutional Neural Networks (CNNs)

Yann LeCun's early work on convolutional neural networks established the dominant architecture for visual pattern recognition, from handwritten digits to modern computer vision.

The development of Convolutional Neural Networks (CNNs) by Yann LeCun and colleagues in the late 1980s and 1990s marked a critical step in making neural networks practical for real-world tasks. By building spatial structure directly into the network architecture, CNNs became the standard models for image recognition and document processing, and eventually for computer vision as a whole.

The canonical CNN paper - Gradient-Based Learning Applied to Document Recognition (1998) - introduced LeNet-5, a deep convolutional architecture trained with backpropagation to recognize handwritten digits.

Historical Context

CNNs emerged from the need to scale neural networks to complex input spaces like images. Early neural nets such as the Perceptron and multi-layer perceptrons (MLPs) lacked the ability to model local patterns efficiently.

LeCun integrated three key ideas:

- Local receptive fields: Neurons connected only to small regions of the input.

- Weight sharing: Filters (kernels) applied across the entire image.

- Subsampling (pooling): Reducing spatial resolution while preserving features.

These mechanisms drastically reduced the number of parameters, gave the network a degree of invariance to small translations and distortions of the input, and made learning directly from image data practical.
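To make the parameter savings concrete, the sketch below compares a weight-shared convolutional layer with a fully connected layer that produces the same number of outputs. The sizes (a 32x32 single-channel input, six 5x5 filters, no padding) are assumptions chosen to resemble the first layer usually described for LeNet-5, and the helper names `dense_params` and `conv_params` are illustrative only.

```python
def dense_params(n_inputs: int, n_outputs: int) -> int:
    """Weights plus biases for a fully connected layer."""
    return n_inputs * n_outputs + n_outputs


def conv_params(in_channels: int, out_channels: int, kernel_size: int) -> int:
    """Weights plus biases for a conv layer whose square kernels are shared
    across every spatial position."""
    return out_channels * (in_channels * kernel_size * kernel_size + 1)


# Assumed sizes: 32x32 single-channel input, six 5x5 filters, no padding.
height = width = 32
kernel = 5
filters = 6
out_side = height - kernel + 1               # side length of each feature map

n_inputs = height * width                    # pixels in the input image
n_outputs = filters * out_side * out_side    # units across all feature maps

shared = conv_params(1, filters, kernel)
unshared = dense_params(n_inputs, n_outputs)

print(f"conv layer with shared 5x5 filters: {shared} parameters")
print(f"dense layer with the same outputs:  {unshared} parameters")
```

Under these assumptions the convolutional layer needs a few hundred parameters where the dense layer needs several million, which is the reduction the three ideas above are responsible for.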

Technical Summary

A typical CNN consists of:

- Convolutional layers: Extract local patterns using learnable filters.

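- Activation functions: Non-linearities applied after each convolution (ReLU in most modern networks; LeNet-5 used a scaled tanh) so that stacked layers can represent non-linear functions.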

- Pooling layers: Downsample feature maps to reduce computation and overfitting.

- Fully connected layers: For final classification or regression.

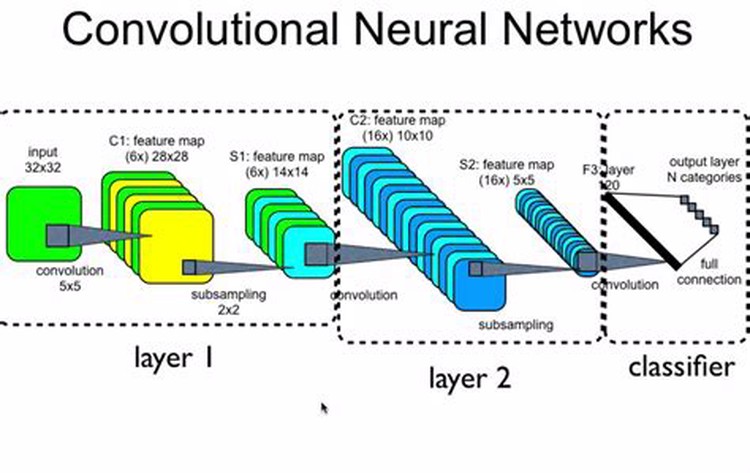
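The first three building blocks can be sketched in plain Python on a toy input. This is a minimal illustration, not an efficient implementation: the 5x5 image and the hand-set edge-detecting kernel are made up for the example (in a trained CNN the kernel values would be learned), and `conv2d` here is the stride-1, no-padding cross-correlation that deep learning libraries conventionally call convolution.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over the image (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(fmap: Matrix) -> Matrix:
    """Element-wise max(0, x)."""
    return [[max(0.0, v) for v in row] for row in fmap]


def max_pool(fmap: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling; rows/columns that do not fill a
    complete window are dropped."""
    out_h, out_w = len(fmap) // size, len(fmap[0]) // size
    return [
        [
            max(fmap[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Toy image with a vertical edge: two dark columns, then three bright ones.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(5)]
# Hand-set kernel that responds to dark-to-bright transitions (illustrative).
kernel = [[-1.0, 1.0],
          [-1.0, 1.0]]

features = conv2d(image, kernel)   # 4x4 feature map
activated = relu(features)         # negative responses clipped to zero
pooled = max_pool(activated)       # 2x2 map after 2x2 pooling

for row in pooled:
    print(row)
```

The same kernel is applied at every position, so the edge is detected wherever it appears, and pooling keeps the strongest response in each 2x2 window while halving the resolution.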

Example: LeNet-5 Architecture

- Input: 32x32 grayscale image

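- Two convolution + subsampling (pooling) stages

- A third convolutional layer (C5, 120 feature maps) that is effectively fully connected because its 5x5 kernels cover its entire 5x5 input, followed by a fully connected layer (F6, 84 units)

- Output: 10 units, one per digit class (Euclidean radial basis function units in the original paper; modern reimplementations usually substitute a 10-way softmax)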
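The spatial sizes through the convolution and pooling stages follow from simple arithmetic. The sketch below traces them assuming 5x5 kernels with stride 1 and no padding, and 2x2 non-overlapping subsampling; these values come from the usual description of LeNet-5 rather than from the list above, and the helper names are illustrative.

```python
def conv_out(size: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Output side length of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size: int, window: int) -> int:
    """Output side length of non-overlapping pooling."""
    return size // window


# (label, kind, kernel or window size) for the two conv + pool stages.
# Assumed: 5x5 convolutions, 2x2 subsampling.
stages = [
    ("conv 5x5", "conv", 5),
    ("pool 2x2", "pool", 2),
    ("conv 5x5", "conv", 5),
    ("pool 2x2", "pool", 2),
]

side = 32
sides = [side]
print(f"input    -> {side}x{side}")
for label, kind, k in stages:
    side = conv_out(side, k) if kind == "conv" else pool_out(side, k)
    sides.append(side)
    print(f"{label} -> {side}x{side}")
```

Under these assumptions the 32x32 input shrinks to 5x5 feature maps after the second pooling stage, which is what the later layers consume.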

CNNs are trained using backpropagation and stochastic gradient descent (SGD).
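As a rough illustration of this training loop, the sketch below uses SGD to recover a single 1D convolution filter from input/output examples. It is a toy under stated assumptions: the target filter `true_kernel`, the learning rate, and the signal length are invented for the example, and the gradient is written out by hand for one layer, whereas backpropagation in a real CNN applies the chain rule through every layer.

```python
import random


def conv1d(signal, kernel):
    """Stride-1, no-padding 1D cross-correlation."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def sgd_step(kernel, signal, target, lr):
    """One SGD update on the mean squared error for a single example."""
    pred = conv1d(signal, kernel)
    errors = [p - t for p, t in zip(pred, target)]
    n = len(errors)
    # d(MSE)/d kernel[j] = (2/n) * sum_i errors[i] * signal[i + j].
    # The sum over positions i appears because the weight is shared.
    grads = [
        (2.0 / n) * sum(errors[i] * signal[i + j] for i in range(n))
        for j in range(len(kernel))
    ]
    return [w - lr * g for w, g in zip(kernel, grads)]


rng = random.Random(0)             # fixed seed for reproducibility
true_kernel = [0.5, -1.0, 0.25]    # hypothetical filter to be recovered
kernel = [0.0, 0.0, 0.0]           # initial guess

for _ in range(2000):
    # Each step draws one fresh random example: the "stochastic" in SGD.
    signal = [rng.uniform(-1.0, 1.0) for _ in range(8)]
    target = conv1d(signal, true_kernel)
    kernel = sgd_step(kernel, signal, target, lr=0.1)

print([round(w, 3) for w in kernel])
```

Because the filter weights are shared, each weight's gradient accumulates contributions from every position where the filter was applied.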

Impact and Applications

LeNet achieved state-of-the-art performance on digit recognition tasks such as MNIST. But CNNs truly took off in the 2010s with the advent of large datasets (e.g. ImageNet), GPU acceleration, and deeper architectures like AlexNet, VGG, ResNet, and EfficientNet.

Modern CNNs are used in:

- Medical imaging

- Object detection and tracking

- Facial recognition

- Self-driving vehicles

CNNs also serve as components in larger systems, for example as encoders in encoder-decoder architectures and as the generator and discriminator networks in GANs.

Related Work

- Backpropagation: Enabled end-to-end CNN training

- Perceptron: Predecessor lacking spatial structure

- Deep Belief Nets: Alternative unsupervised pretraining

- GANs: CNNs used in generators and discriminators

Further Reading

- LeCun, Y., Bottou, L., Bengio, Y., & Haffner, P. (1998). Gradient-Based Learning Applied to Document Recognition. Proceedings of the IEEE.

- LeCun, Y. (1989). Generalization and Network Design Strategies. In Connectionism in Perspective. Elsevier.

- Evolution of Model Architectures overview
