Evolution of Model Architectures

From the Perceptron to Transformers, this article traces the historical progression and key design shifts in neural network architectures.

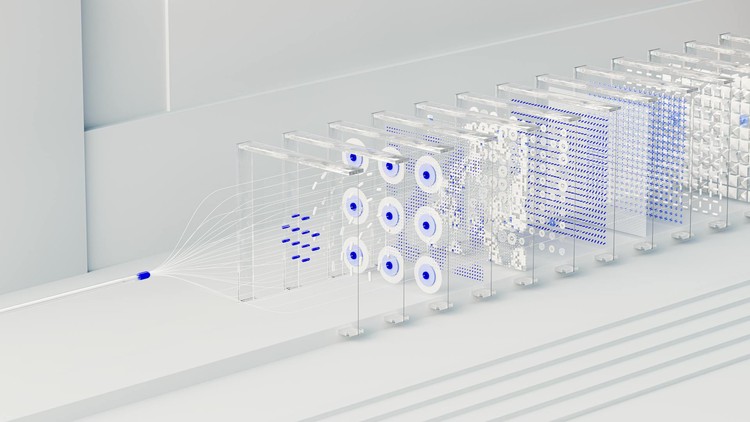

The evolution of neural network architectures over the past eight decades reflects the growing sophistication of both the tasks we demand of machines and our understanding of how to build models that learn. From the symbolic reasoning of early AI to the statistical learning of neural networks, and finally to large-scale, pretrained foundation models, each step forward introduced new capabilities—and new challenges.

This article surveys the major architectural milestones covered in the Papers and Architectures cluster, connecting the dots from artificial neurons to modern Transformers.

Early Foundations

- McCulloch & Pitts (1943): Introduced the idea that logical operations could be modeled with simple artificial neurons. No learning mechanism, but foundational for neural logic.

- Turing (1950): Reframed machine intelligence as behavioral imitation. No architecture, but set the field’s philosophical direction.

- Rosenblatt (1958): Implemented the first trainable neural network, the Perceptron. Worked only for linearly separable problems.
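
The Perceptron's restriction to linearly separable problems can be illustrated with a minimal sketch. The function name `train_perceptron`, the learning rate, and the epoch budget are illustrative assumptions, not details taken from Rosenblatt's paper. The sketch trains on AND (separable) and XOR (not separable) and records whether an error-free pass was ever reached.

```python
def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule for two binary inputs (illustrative sketch).

    Returns (weights, bias, converged), where converged is True if a full
    pass over the data produced no misclassifications.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for (x1, x2), target in samples:
            # Threshold unit: fire if the weighted sum is positive.
            pred = 1 if w[0] * x1 + w[1] * x2 + b > 0 else 0
            delta = target - pred
            if delta != 0:
                # Nudge the decision boundary toward the misclassified point.
                w[0] += lr * delta * x1
                w[1] += lr * delta * x2
                b += lr * delta
                errors += 1
        if errors == 0:
            return w, b, True
    return w, b, False


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

_, _, and_converged = train_perceptron(AND)
_, _, xor_converged = train_perceptron(XOR)
print("AND converged:", and_converged)
print("XOR converged:", xor_converged)
```

No single line separates the XOR classes, so the rule keeps making mistakes however many epochs are allowed. This is the gap that multi-layer networks later closed.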

The Learning Breakthrough

- Rumelhart et al. (1986): Popularized backpropagation for training multi-layer networks, enabling them to learn internal representations.

- LeCun et al. (1998): Established convolutional neural networks (CNNs) with LeNet-5, effective for image data due to spatial inductive biases.

- Hochreiter & Schmidhuber (1997): Mitigated the vanishing gradient problem in RNNs with LSTM, whose gated cell state lets networks retain information across long sequences.
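
The vanishing gradient problem mentioned in the LSTM entry can be made concrete with a scalar toy model. This is a simplified sketch under stated assumptions: a one-unit tanh RNN with zero input, and a hypothetical helper `cell_state_gradient` that treats the LSTM cell state as a purely additive path scaled by a constant forget gate. Real LSTMs have learned, input-dependent gates.

```python
import math


def rnn_gradient_through_time(w, steps, h0=0.5):
    """d h_T / d h_0 for the scalar recurrence h_t = tanh(w * h_{t-1})."""
    h = h0
    grad = 1.0
    for _ in range(steps):
        h = math.tanh(w * h)
        # Chain rule: each step multiplies by w * tanh'(pre-activation).
        grad *= w * (1.0 - h * h)
    return grad


def cell_state_gradient(forget_gate, steps):
    """d c_T / d c_0 when c_t = forget_gate * c_{t-1} + (new input).

    Assumes a constant forget gate, which is a simplification.
    """
    return forget_gate ** steps


for steps in (5, 20, 50):
    print(steps,
          rnn_gradient_through_time(0.5, steps),
          cell_state_gradient(0.99, steps))
```

In the plain recurrence the gradient is a product of factors below one and shrinks roughly geometrically with sequence length. Along the gated cell-state path, a forget gate close to one keeps the product from collapsing.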

The Representation Era

- Hinton et al. (2006): Proposed deep belief networks with layer-wise unsupervised pretraining, revitalizing deep learning.

- Kingma & Welling (2013): Introduced VAEs for probabilistic generative modeling.

- Goodfellow et al. (2014): Developed GANs, launching adversarial training for image and content generation.

The Sequence-to-Sequence Shift

- Sutskever et al. (2014): Formalized encoder-decoder models for machine translation.

- Bahdanau et al. (2014): Introduced attention mechanisms, allowing the decoder to align dynamically with source positions in seq2seq models.

- Vaswani et al. (2017): Replaced recurrence entirely with self-attention in the Transformer, enabling highly parallel training.
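
The attention operation at the heart of this shift can be sketched in a few lines of plain Python. This is an illustrative, single-head version of scaled dot-product attention without masking, batching, or learned projections. The function names and toy vectors are assumptions chosen for the example.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
queries = [[3.0, 0.0], [0.0, 3.0]]
outputs, weights = scaled_dot_product_attention(queries, keys, values)
print(weights)
```

Each query is processed independently of the others, so all positions can be computed at once. This is what gives attention its parallelism compared with step-by-step recurrence.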

The Foundation Model Era

- Radford et al. (2018+): The GPT series of decoder-only Transformers showed that generative pretraining at scale yields strong generalization, including few-shot learning in later models.

- Devlin et al. (2018): Introduced BERT, which pretrains a bidirectional Transformer encoder with masked language modeling.

- Sutton & Barto: Their reinforcement learning framework underpins methods that became core to model alignment and control (e.g., RLHF).

- Diffusion models (e.g., Ho et al. 2020): Enabled state-of-the-art image generation through iterative probabilistic denoising.
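
One structural difference between the GPT and BERT entries above is which positions each token may attend to. The sketch below builds the two attention masks as boolean matrices. The helper name `attention_mask` is hypothetical, and real implementations typically express the same idea with tensors rather than nested lists.

```python
def attention_mask(seq_len, causal):
    """Return a seq_len x seq_len matrix; True means 'may attend'.

    causal=True  : position i sees positions 0..i only (decoder-only style).
    causal=False : every position sees every other (bidirectional style).
    """
    return [
        [(j <= i) if causal else True for j in range(seq_len)]
        for i in range(seq_len)
    ]


causal = attention_mask(3, causal=True)
bidirectional = attention_mask(3, causal=False)

for row in causal:
    print(row)
```

The causal mask suits left-to-right generation, since no token can look at the future. The bidirectional mask lets a masked token be predicted from context on both sides.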

Common Architectural Themes

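- Feedforward vs. Recurrent vs. Attention-based: Early networks were feedforward, while RNNs added temporal structure through recurrence. Attention has since replaced recurrence in most sequence applications, although feedforward layers remain a component of Transformer blocks.

- Pretraining: Emerged with the layer-wise unsupervised pretraining of deep belief nets and became the dominant paradigm with GPT and BERT.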

- Modularity: Encoder-decoder frameworks, multi-head attention, and residual connections improved learning and flexibility.

Outlook

As models scale further in parameter count and take on multimodal inputs, architectural innovation continues. Sparse mixtures of experts, retrieval-augmented generation, and biologically inspired models all point to possible future directions.

Understanding this lineage is essential to navigating where AI is heading—and how its past continues to shape its future.

Further Reading

- All Papers and Architectures Articles

- Anthology of original papers

- Transformer, GPT, and BERT as key modern examples
